on

How to Write Good Unit Tests

It is probable that you have encountered numerous tests, or perhaps none at all (though this scenario is hopefully uncommon). If you belong to the former group, you may have experienced the laborious process of comprehending and constructing tests, which is painful for the eyes and patience. Despite the challenges, investing effort into test creation yields considerable benefits in the long term.

Consider a scenario where you aim to enhance your codebase. Upon conducting benchmarks, you identify sluggish and opaque segments within your code. What course of action do you take? Typically, you pinpoint these pain points and proceed to refactor with the expectation of improvement. Subsequently, after a period of refinement, say, several days or weeks, you manage to enhance performance substantially, perhaps achieving a 50% boost in efficiency—an outcome worthy of commendation in practical applications. However, what follows next? You contemplate merging your feature branch into the main codebase, yet uncertainty looms: Are you certain that all functionalities operate flawlessly? Are there comprehensive tests in place to validate that the end result remains consistent? Is there assurance that unintended consequences are not introduced to other areas of the codebase or experienced by users?

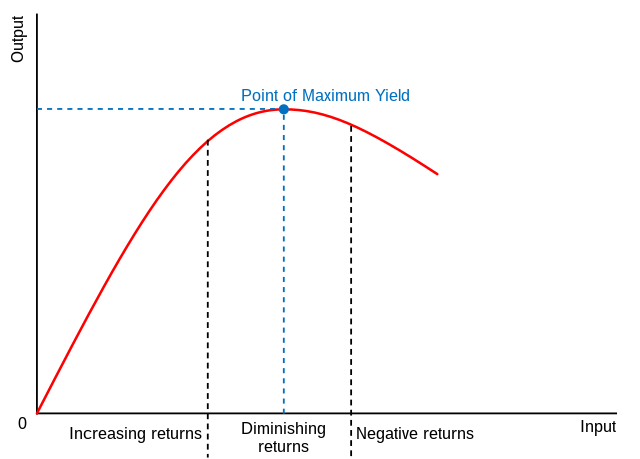

To ensure the desired behavior of the code, various types of tests are indispensable, including functional, unit, and end-to-end tests. These tests collectively elevate code quality, thereby diminishing the likelihood of faults or bugs. Nevertheless, the principle of diminishing returns applies to test coverage: the act of increasing test coverage does not indefinitely yield proportional benefits. Instead, there comes a point where additional tests cease to provide substantial returns and may even impede productivity or consume excessive time resources.

Throughout this article, I will focus exclusively on unit testing, presenting straightforward yet real-life instances of inadequate unit tests that I have encountered over time. It is worth noting that while these examples pertain specifically to unit testing, certain principles may also apply to other forms of testing.

Bad Unit Test #1 - clear input and output variables

The following test appears concise and well-structured at first glance. However,

upon closer examination, its clarity is lacking, primarily due to the ambiguous

naming of constants such as input and output.

it('test that we compute the area of a circle', () => {

const input = 10;

const output = computeArea(input);

expect(output).toBe(314,16);

});

Solution: ensure the names of the variables describe the contents of the variable, don’t care about the types.

A more comprehensible version of this test employs clear and descriptive variable names, enhancing its readability and understandability. While both versions are functionally equivalent, the second iteration is subjectively more intuitive and easier to follow. It is important to note that this improvement does not inherently affect any quantitative aspects of the test’s functionality, but it’s clear and readable.

it('should test that we compute the area of a circle', () => {

const diameter = 10;

const expectedArea = 314.16;

const result = computeArea(diameter);

expect(result).toBe(expectedArea);

});

Bad Unit Test #2 - testing too many things

Unit tests demand clarity, conciseness, and precision. Each test should focus on evaluating a single unit of functionality, such as an individual function, rather than attempting to assess multiple functions within a class in a single test case. It is crucial to maintain a narrow scope and avoid overcomplicating tests. By adhering to these principles, unit tests remain succinct, straightforward, and effective in verifying the expected behavior of specific code components.

// Code

class SomeService {

private repo: Repo;

private repo2: Repo2;

private repo3: Repo3;

func1(id: string): Object | null {

return this.repo.get(id);

}

func2(name: string): boolean {

const v = this.repo2.get(name);

const v2 = this.repo3.get(v.id);

return this.repo.update(v.id, name, v2.id);

}

func3(): boolean {

console.log("Doing something here...");

return true;

}

}

// Test case

it('test1', () => {

const SUT = new SomeService();

const res1 = SUT.func1(id);

const res2 = SUT.func2(res1.name);

SUT.func3();

expect(res2).toBe(true);

expect(SUT.func1).not.toThrow();

expect(SUT.func3).not.toThrow();

});

Solution: break the test in smaller parts, don’t test too many things in a unit test.

In the spirit of adhering to the principle of testing only one thing per unit test,

let’s refactor the existing test suite to create separate tests for each function.

This approach ensures that each test focuses exclusively on validating the behavior

of a single function func1.

it('test1', () => {

const SUT = new SomeService();

// here we test only 1 function

const result = SUT.func1(id);

expect(result).toBe(true);

expect(SUT.func1).not.toThrow();

});

Bad Unit Test #3 - testing code without logic

Examples of tests that fall into the category of bad practices include those that solely consist of return statements without any logic validation, as well as tests where everything is excessively mocked.

const realCode = (id: string) => {

return myRepo.findOne({ id });

}

// Your test

it('testing the realCode', async () => {

// Assume myRepo is mocked.

const myRepo = mocked();

const id = makeSomeFakeId();

await realCode(id);

expect(realCode).toHaveBeenCalled(1);

expect(realCode).toHaveBeenCalledWith({ id: id });

});

Indeed, the provided test appears to miss the point of testing due to the extensive mocking

involved. In this scenario, where realCode serves primarily as a wrapper for findOne and

myRepo is mocked, the utility of the test may come into question.

The purpose of testing should always be to validate the behavior and correctness of the code under test. However, in cases like this, where the test seems to primarily validate the underlying framework’s behavior (in this case, TypeORM), rather than the custom application logic, it may indicate a misunderstanding of the purpose of tests or an attempt to artificially inflate test coverage.

In either case, it’s essential to educate developers on the principles of effective testing and the importance of testing custom application logic rather than framework behavior. Tests should provide confidence in the behavior and correctness of the code, helping to identify and prevent bugs. Writing tests solely for the purpose of increasing coverage without meaningful validation undermines the value of testing and can lead to maintenance challenges in the long run.

This a common thing I’ve seen in cadebases, wondering what’s the purpose of it. Unfortunately the original author is never present to ask him.

Solution: Obviously delete the code.

Bad Unit Tests #4 - overengineering or overusing DRY

Over-engineering and overusing the DRY (Don’t Repeat Yourself) principle are common challenges encountered in both mature and immature codebases. Developers often extend these principles from the production code to their tests, aiming to avoid repetition by employing techniques like creating common functions for mocks that are invoked during setup steps. While this approach may initially result in cleaner-looking code, it can ultimately diminish readability and make it more challenging to comprehend the purpose and behavior of the test.

The primary issue with over-engineering tests in this manner is that it can obscure the actual behavior being tested. By abstracting away too much of the test’s logic the intent of the test becomes less clear. Other developers reviewing the test may struggle to understand its purpose and how it validates the code under test.

Additionally, over-engineering tests can introduce unnecessary complexity and fragility. Maintaining overly complex tests can become burdensome, as it may require significant effort to update them when the underlying code evolves.

Instead of prioritizing DRYness at all costs in tests, developers should aim for clarity, simplicity, and maintainability. While some level of abstraction and reuse is beneficial, it’s essential to strike a balance and ensure that tests remain understandable and focused on validating the intended behavior of the code. In cases where clarity is sacrificed for the sake of avoiding repetition, it may be more beneficial to prioritize readability and comprehension, even if it means duplicating a few lines of. Ultimately, the goal of testing is to provide confidence in the correctness of the code, and this should take precedence over adherence to DRY in test code.

describe('Big Test Scenario', () => {

function mock1() {

MockedRepo.mock(true);

MockedService.mock('');

}

function mock2() {

MockedRepo2.mock(false);

MockedService.mock('1');

MockedService2.mock();

}

beforeEach(() => {

mock1();

mock2();

});

it('test 1 - test something', () => {

const result = code();

expect(result).toBe(true);

expect(mock1.MockedRepo).toHaveBeenCalled());

});

it('test 2 - test something', () => {

const result = code();

expect(result).toBe(true);

expect(mock2.MockedRepo2).toHaveBeenCalled());

});

it('test 2.1 - test something', () => {

const result = code();

expect(result).toBe(false);

expect(mock2.MockedRepo2).toHaveBeenCalled());

});

});

Solution: don’t hide your mocks behind function that makes the test unclear.

describe('Big Test Scenario', () => {

it('test 1 - test something', () => {

MockedRepo.mock(true);

MockedService.mock('');

const result = code();

expect(result).toBe(true);

expect(mock1.MockedRepo).toHaveBeenCalled());

});

it('test 2 - test something', () => {

MockedRepo2.mock(false);

MockedService.mock('1');

MockedService2.mock();

const result = code();

expect(result).toBe(true);

expect(mock2.MockedRepo2).toHaveBeenCalled());

});

it('test 2.1 - test something', () => {

MockedRepo2.mock(false);

MockedService.mock('1');

MockedService2.mock();

const result = code();

expect(result).toBe(false);

expect(mock2.MockedRepo2).toHaveBeenCalled());

});

});

In the provided example, although the test may appear lengthier, it offers clarity in its structure. Each mock and setup is explicitly defined, facilitating rapid comprehension of which components are being mocked and what aspects of the code are under examination. This clarity allows developers to swiftly grasp the test’s purpose and execution without the need to decipher underlying implementation details.

General Bad Practice

Maintaining tests can be as time-consuming as managing production code. Therefore, it’s essential to strike a balance and avoid overdoing it. By maintaining a diverse set of tests aligned with the testing pyramid principles, you can minimize redundancy and ensure efficient test coverage. The primary goal is to keep maintenance efforts to a minimum without compromising quality.

Consider a scenario where you’re responsible for a service with 5000 unit tests. Even minor changes in the codebase can result in numerous test failures. Often, these failures occur due to insignificant modifications, such as adding a new parameter to a function, which has little impact on the overall functionality.

In such cases, the maintenance workload can outweigh the benefits gained from the tests. The return on investment (ROI) of tests becomes low, as significant effort is spent to address minor changes that have minimal repercussions on the end result. Therefore, it’s crucial to assess the cost-benefit ratio of tests and prioritize efforts accordingly to optimize maintenance efforts while maintaining code quality.

Solution: don’t overdo it with unit tests, strike a balance between unit and functional tests. Too many functional tests is not great either.

Conclusion

In conclusion, maintaining tests is a crucial aspect of software development that requires careful consideration and balance. While investing effort into creating more tests expecting great benefits seems reasonable, it’s essential not to overdo it. Striking a balance between different types of tests, such as unit, functional, and end-to-end tests, is key to ensuring efficient test coverage without excessive redundancy.

One common pitfall to avoid is over-engineering tests or overusing the DRY principle. While it may seem beneficial to apply the same principles from production code to tests, such as creating common functions for mocks, this can lead to decreased readability and increased maintenance burden. Additionally, it’s important to assess the cost-benefit ratio of tests, especially in scenarios where maintaining tests becomes as time-consuming as managing production code itself.

Ultimately, the goal of testing is to provide confidence in the correctness and reliability of the code while minimizing maintenance efforts. By maintaining a balanced approach to testing and prioritizing clarity, simplicity, and maintainability, developers can optimize their testing efforts and ensure code quality without sacrificing productivity.

Unfortunately, the above examples exist in a lot of codebases at some point in time. Don’t blame the developer who wrote them, it does not help. Instead put time and effort to fix them.